This site is a JamSTACK Site

What is it?

It’s commonly said that the cloud is just “someone else’s computer.” However, it’s more accurate to say that the “cloud” is a mindset for managing applications, taking advantage of economies of scale through extremely large data centers.

In the world of web development this has led to the popularity of JamSTACK, which uses cloud abstractions to optimize website architectures.

At the most basic level, JamSTACK involves serving static web content through a CDN. Cloud platforms allow developers to use a CDN as a frontend for storage services, such that from a developer’s perspective there is no server.

In the modern world of dynamic web applications, static websites may seem like a step backwards, but consider that cloud computing allows for more than just cost-saving architectures – these services allow for a much more flexible approach to managing applications, including websites. Common “CI” practices like source control with automated deployment pipelines can be used to push updates, so that developers can achieve the performance of serving static HTML pages with the flexibility of a CMS. Typically, simple markdown templates are compiled into a complete website with images, layout and formatting. Automating this rendering step enables a high level of flexibility, without sacrificing the performance of a static website.
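To make the rendering step concrete, here is a toy sketch of what a static site generator does at build time: it reads a content file, fills in a shared layout, and emits finished HTML. This is not how Hugo is implemented. The `LAYOUT` template, the `render_page` function, and the `title:` header format are all hypothetical, and a real generator would also convert Markdown syntax, which this sketch skips.

```python
from html import escape
from string import Template

# Hypothetical shared layout; a real generator would load this from a theme.
LAYOUT = Template(
    "<html><head><title>$title</title></head>"
    "<body><h1>$title</h1>$body</body></html>"
)


def render_page(source: str) -> str:
    """Render one content file: a 'title:' line, a blank line, then paragraphs."""
    header, _, content = source.partition("\n\n")
    # Everything after the first colon on the header line is the title.
    _, _, title = header.partition(":")
    # Treat each blank-line-separated chunk as one paragraph.
    paragraphs = [p.strip() for p in content.split("\n\n") if p.strip()]
    body = "".join(f"<p>{escape(p)}</p>" for p in paragraphs)
    return LAYOUT.substitute(title=escape(title.strip()), body=body)


page = render_page("title: Hello\n\nFirst post.\n\nBuilt at deploy time.")
print(page)
```

Because this runs once per deploy rather than once per request, the CDN only ever serves the finished HTML.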

JavaScript (the “J”) allows this approach to be extended to dynamic content: a static webpage can make calls to microservices, often powered by FaaS tools like AWS Lambda.

The tools

- The cloud

- Static site generators

- Source control with build pipelines

How I’m using these concepts

I like simplicity, and I’m wary of how modern development practices can cause unnecessary complexity compared to established practices. I don’t want to have to touch HTML, at least in terms of managing a layout, and I want to be able to quickly add posts. Typically this would mean using a CMS like WordPress.

This was my preference until I saw an article about using a static website generator with S3. For a personal website with low traffic, the cost savings are significant: pennies per month for the cost of storage and bandwidth, versus $30 or more per month for a tiny virtual machine.

I built my first version of this kind of architecture on AWS, using Hugo along with AWS Lambda to trigger builds. I could update markdown templates on my laptop, then push these updates to S3. That upload triggered a Lambda function that ran Hugo and copied the build to a different S3 bucket, which stored the actual site served by CloudFront.

Managing the site was easy and the cost was negligible, but I wasn’t a fan of the architecture, since I could easily delete the data on both my laptop and S3, destroying all of my work. When I moved to GCP I put source control and an automated build pipeline in place. In addition to making it possible to manage my site from anywhere, this meant I could use continuous integration and get the safety that source control and automation provide.

These days I manage the site by updating markdown templates and committing my changes. Once I’m satisfied with how the site looks on my local machine (I can test the site using a local Hugo server), I push everything to a master branch and a build pipeline kicks off, running Hugo to build the site and uploading it to Firebase.

I use Firebase because it saves me several steps, but an equivalent architecture on GCP would be to load the site into a GCS bucket behind an HTTPS Load Balancer, with the CDN option enabled. Firebase provides static website hosting with a free tier, and this saves me several dollars per month by avoiding minimum charges on GCP load balancing. My bill so far for this month is $0.01. (Of course, my bills in other projects where I tend to forget to spin down VMs are higher.)

More specifically

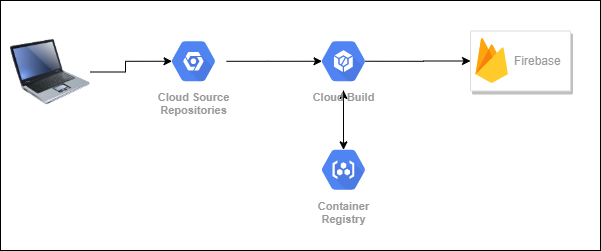

The GCP services I’m using are Cloud Source Repositories, Cloud Build, Container Registry, Secret Manager, and Firebase.

I make changes to markdown files on my personal machine and commit to CSR. Once I push the commit, a Cloud Build task kicks off. This is orchestrated by a cloudbuild.yaml file that lists the build steps in order. Every step in a cloudbuild.yaml file references a container image. Most of these images are purpose-built for Cloud Build, and contain standard tools like the gcloud CLI or Firebase Tools. I use Secret Manager to pass secrets to build steps securely.

This is very similar to the process outlined in this blog post by Chris Liatas, although I did many of the initial setup tasks by hand rather than including them in the build steps.
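As a rough illustration of the build described above, a cloudbuild.yaml for this kind of pipeline might look something like the sketch below. It is not my actual file. It assumes that Hugo and Firebase builder images have already been pushed to the project's Container Registry under the names `hugo` and `firebase`, that each image's entrypoint is the corresponding CLI, and that a Firebase CI token is stored in Secret Manager. The project ID `my-project` and the secret name `firebase-token` are hypothetical placeholders.

```yaml
# cloudbuild.yaml (illustrative sketch under the assumptions stated above)
steps:
  # Build the site with Hugo; by default the output goes to ./public.
  # Assumes a builder image named "hugo" whose entrypoint is the hugo binary.
  - name: 'gcr.io/$PROJECT_ID/hugo'

  # Deploy the generated files to Firebase Hosting.
  # Assumes a builder image named "firebase" whose entrypoint is the Firebase CLI.
  - name: 'gcr.io/$PROJECT_ID/firebase'
    args: ['deploy', '--only', 'hosting']
    secretEnv: ['FIREBASE_TOKEN']

# Expose a Secret Manager secret to the deploy step as an environment variable.
# "my-project" and "firebase-token" are placeholders, not real resource names.
availableSecrets:
  secretManager:
    - versionName: 'projects/my-project/secrets/firebase-token/versions/latest'
      env: 'FIREBASE_TOKEN'
```

Each step runs in its own container against a shared workspace, which is why the deploy step can see the files that the Hugo step generated.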

This is the flow I currently use:

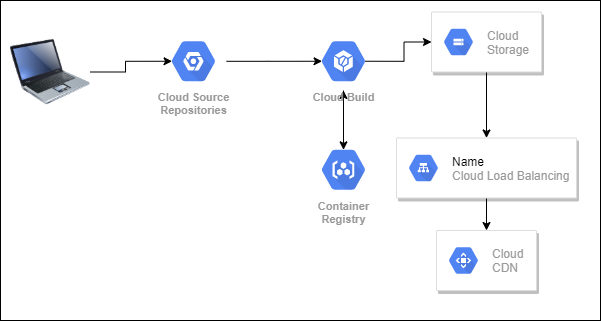

This is a mostly equivalent architecture with standard GCP services: